HoloLens Training Application

A renowned multi-business conglomerate has established a well-defined global training program called “Gurukul,” which equips field operators with fundamental knowledge of how to operate and maintain the company’s large-scale equipment.

An AR tablet application was developed to help end users learn how the equipment works and how to maintain and troubleshoot it, even in a classroom setting. The application gives users easy access to detailed, step-by-step guidance on every aspect of the equipment.

- Project Highlights

Bloom Gesture for HoloLens Navigation:

Feature: The Bloom Gesture is a specific hand gesture that opens the HoloLens main menu: the user opens their hand from a closed fist to trigger the command. Impact: This gesture provides an intuitive, controller-free way to navigate the HoloLens environment, enhancing user immersion and accessibility.

App Launch and Air-Tap Interaction:

Feature: After opening the main menu with the Bloom Gesture, the user selects the app to launch the augmented experience. Air-tapping “Packing” then takes the user to the machine type page. Impact: The seamless transition from one gesture to the next (Bloom, then air-tap) makes navigation fluid and interactive, contributing to an efficient, user-friendly experience.

2D UI Representation:

Feature: The screen displays a 2D user interface with selectable options such as “Making,” “Packing,” and “Electrical.” Impact: A clear, minimal 2D UI simplifies decision-making, reducing complexity while allowing quick selection of operational categories.

Default Taskbar:

Feature: The default HoloLens taskbar remains at the top of the screen, providing easy access to essential system controls. Impact: This persistent taskbar ensures the user has continuous access to standard HoloLens functionalities without disrupting the augmented reality experience.
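The navigation path described above (Bloom opens the main menu, selecting the app launches the experience, and air-tapping “Packing” opens the machine type page) can be pictured as a small state machine. The following is a minimal Python sketch under stated assumptions: the page names, the event strings, the `TrainingApp` label, and the `Navigator` class are hypothetical and are not part of any HoloLens or MRTK API. On a real device the HoloLens shell handles the Bloom gesture and app launch, so only the in-app transitions would be application code.

```python
from enum import Enum, auto


class Page(Enum):
    # Hypothetical page identifiers for illustration only.
    HOME = auto()           # HoloLens environment, app not yet opened
    MAIN_MENU = auto()      # main menu opened by the Bloom gesture
    CATEGORY_MENU = auto()  # 2D UI: Making / Packing / Electrical
    MACHINE_TYPE = auto()   # machine type page for the chosen category


CATEGORIES = ("Making", "Packing", "Electrical")
APP_NAME = "TrainingApp"  # hypothetical label of the app tile in the main menu


class Navigator:
    """Tracks which page is shown in response to gesture events (sketch)."""

    def __init__(self):
        self.page = Page.HOME
        self.category = None

    def handle(self, event, target=None):
        if event == "bloom":
            # Bloom opens the main menu from anywhere.
            self.page = Page.MAIN_MENU
        elif event == "air_tap":
            if self.page is Page.MAIN_MENU and target == APP_NAME:
                self.page = Page.CATEGORY_MENU
            elif self.page is Page.CATEGORY_MENU and target in CATEGORIES:
                self.category = target
                self.page = Page.MACHINE_TYPE
        # Any other event/target combination leaves the page unchanged.
        return self.page
```

In this sketch, an air-tap on anything other than a valid target for the current page leaves the page unchanged, so the user cannot skip ahead in the flow.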

- Bloom Gesture

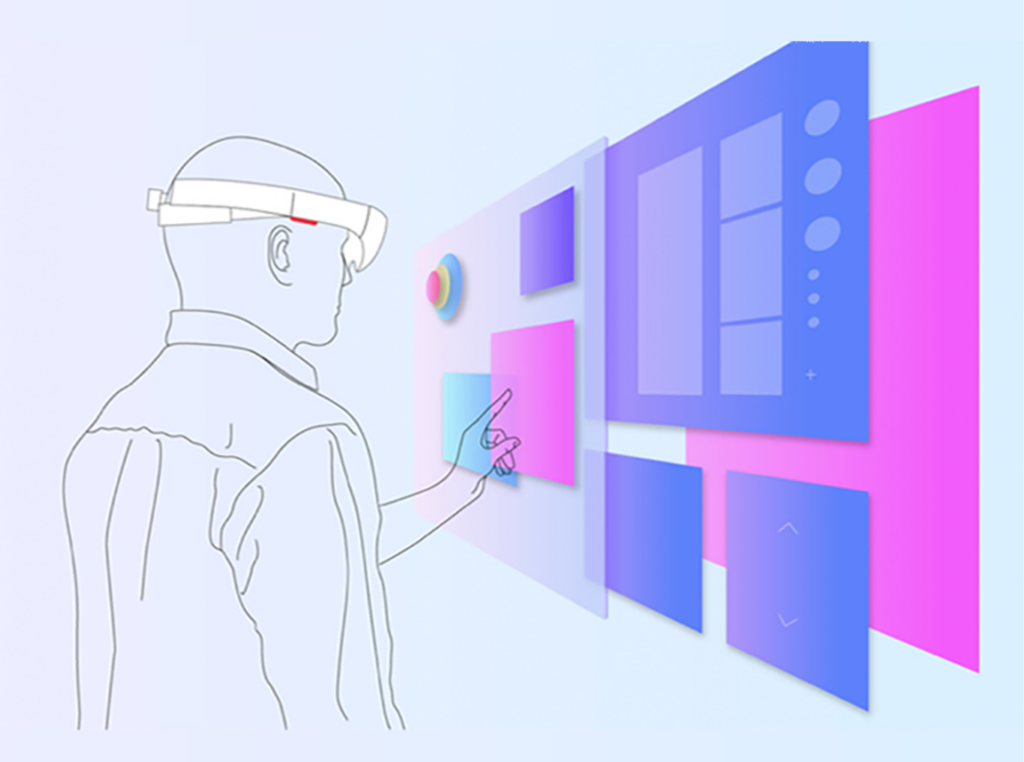
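On HoloLens, Bloom is a system gesture handled by the shell, so the training app itself would not normally need to detect it. Purely to illustrate the gesture described above (a closed fist that opens into a flat hand), here is a minimal Python sketch that looks for that transition within a short time window. The `HandSample` type, the 0-to-1 "openness" score, the thresholds, and the time window are all assumptions chosen for illustration, not values from any HoloLens API.

```python
from dataclasses import dataclass


@dataclass
class HandSample:
    t: float         # timestamp in seconds
    openness: float  # hypothetical metric: 0.0 = closed fist, 1.0 = fully open hand


FIST_MAX = 0.2      # assumed: openness at or below this counts as a fist
OPEN_MIN = 0.8      # assumed: openness at or above this counts as an open hand
MAX_DURATION = 0.6  # assumed: the fist must open within this many seconds


def detect_bloom(samples):
    """Return True if a closed fist opens into an open hand quickly enough."""
    fist_time = None
    for s in samples:
        if s.openness <= FIST_MAX:
            fist_time = s.t  # remember the most recent closed-fist sample
        elif s.openness >= OPEN_MIN and fist_time is not None:
            if s.t - fist_time <= MAX_DURATION:
                return True
            fist_time = None  # opened too slowly; wait for a new fist
    return False
```

Requiring a time window keeps a slow, incidental hand opening from being treated as a deliberate command.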

3D View Representation:

Feature: The application provides a 3D view of the machine interface where users can visually interact with processes such as “Making,” “Packing,” and “Electrical.” Impact: The 3D representation enhances user engagement by creating a realistic, interactive environment. Users can clearly see the available options, which makes navigation and learning more immersive.

Machine Selection via Gaze and Tap:

Feature: Users select a machine by gazing at it and confirming the selection with an air-tap gesture. Impact: This controller-free interaction adds to the convenience and efficiency of the application, letting users interact naturally with the environment without a physical controller.

Global Navigation Bar:

Feature: The global navigation bar floats at the bottom of the screen and remains accessible throughout the application. Impact: Users can quickly switch between functions or sections of the app without disrupting the flow, keeping the experience smooth and consistent.

Back Button Functionality:

Feature: The back button returns users to the previous step. It is disabled at certain points, such as the initial screen, and becomes enabled as the user progresses further into the app. Impact: This guides the user by restricting actions when necessary, preventing confusion during initial setup while keeping navigation flexible as the user advances.

Disabled Buttons for Machine Selection:

Feature: Other interface buttons are disabled until the user selects a machine. Impact: This prevents unnecessary interaction and guides the user to focus on the task at hand, streamlining the decision-making process and minimizing potential errors.
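The enable/disable rules for the navigation bar can be expressed as two derived properties: the back button is enabled only when there is a previous step to return to, and the other buttons are enabled only once a machine has been selected. The following minimal Python sketch assumes a simple page-history stack; the `NavBarState` class, its method names, and the page and machine identifiers are hypothetical.

```python
class NavBarState:
    """Hypothetical model of the global navigation bar's button states."""

    def __init__(self, start_page):
        self.history = [start_page]  # stack of visited pages
        self.selected_machine = None

    @property
    def back_enabled(self):
        # Disabled on the initial screen; enabled once the user has moved on.
        return len(self.history) > 1

    @property
    def actions_enabled(self):
        # Other buttons stay disabled until a machine is selected.
        return self.selected_machine is not None

    def go_to(self, page):
        self.history.append(page)

    def select_machine(self, machine_id):
        self.selected_machine = machine_id

    def back(self):
        """Return to the previous page; a no-op while back is disabled."""
        if self.back_enabled:
            self.history.pop()
        return self.history[-1]
```

Deriving the button states from the history and selection, rather than toggling them by hand at each step, keeps them consistent with wherever the user actually is.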

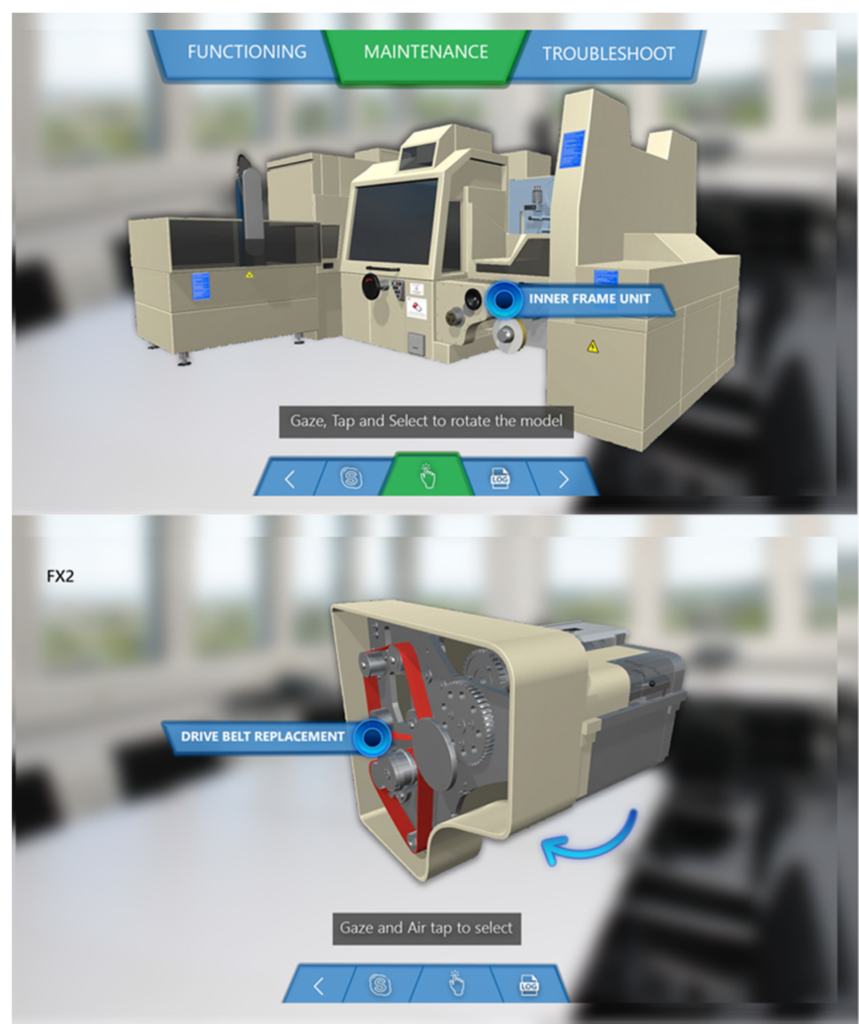
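Gaze-and-tap selection, as described in the machine-selection feature above, is commonly implemented by casting a ray from the user’s head along the gaze direction, highlighting the nearest object it hits, and letting the air-tap confirm that target. In a Unity/MRTK project the engine’s raycasting and input system would normally do this. The minimal Python sketch below shows only the underlying geometry, under the assumption that each machine is approximated by a bounding sphere; the `Machine` type and the function names are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def normalize(v: Vec3) -> Vec3:
    n = math.sqrt(dot(v, v))
    return (v[0] / n, v[1] / n, v[2] / n)


@dataclass
class Machine:
    name: str
    center: Vec3
    radius: float  # bounding-sphere radius (assumed approximation)


def ray_sphere_distance(origin: Vec3, direction: Vec3, m: Machine) -> Optional[float]:
    """Distance along a unit-length gaze ray to the sphere, or None on a miss."""
    to_center = sub(m.center, origin)
    t_closest = dot(to_center, direction)
    d2 = dot(to_center, to_center) - t_closest * t_closest
    r2 = m.radius * m.radius
    if d2 > r2:
        return None  # ray passes outside the sphere
    half_chord = math.sqrt(r2 - d2)
    t_near, t_far = t_closest - half_chord, t_closest + half_chord
    if t_far < 0:
        return None  # sphere is entirely behind the user
    return t_near if t_near >= 0 else t_far


def gaze_target(head_pos: Vec3, gaze_dir: Vec3, machines: Sequence[Machine]) -> Optional[Machine]:
    """Nearest machine hit by the gaze ray, i.e. the one that would be highlighted."""
    direction = normalize(gaze_dir)
    best, best_t = None, math.inf
    for m in machines:
        t = ray_sphere_distance(head_pos, direction, m)
        if t is not None and t < best_t:
            best, best_t = m, t
    return best


def on_air_tap(head_pos: Vec3, gaze_dir: Vec3, machines: Sequence[Machine]) -> Optional[str]:
    """An air-tap confirms whichever machine is currently gazed at."""
    target = gaze_target(head_pos, gaze_dir, machines)
    return target.name if target else None
```

Picking the nearest hit matters when machines overlap along the line of sight: the user selects the one in front, not one hidden behind it.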

Augmented Reality (AR) Model:

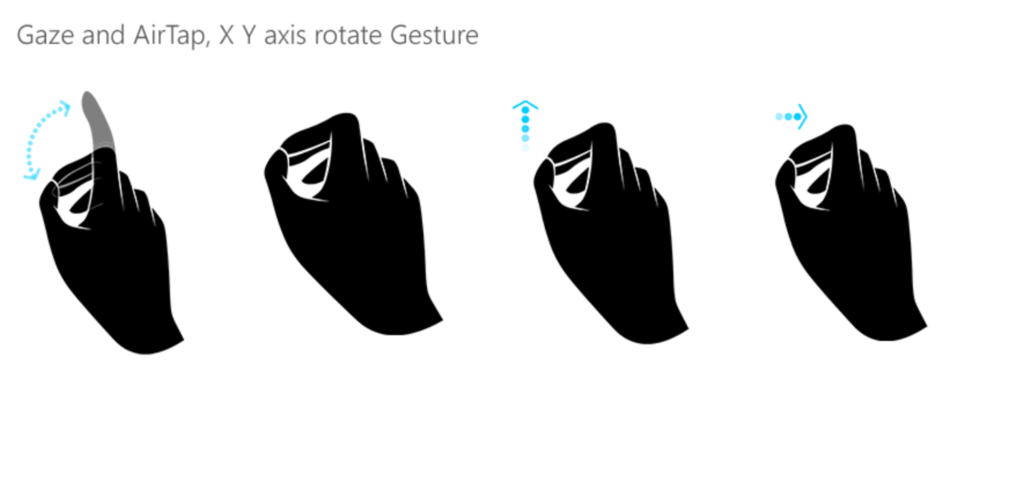

Feature: Upon selecting a machine, the entire machine model is augmented, so the user can interact with a detailed 3D representation of it. Impact: Users can visualize and work with a lifelike, interactive model, which improves learning and comprehension.

Gesture-based Interaction:

Feature: Users can zoom in, zoom out, and rotate the 3D model with intuitive hand gestures, enabled through a dedicated gesture icon. Impact: Gesture-based controls offer a more immersive, user-friendly experience, allowing precise exploration of the model without touching the device.

Component Selection and Interaction:

Feature: The user is prompted to select a specific component or task, such as the Inner Frame Unit or Drive Belt Replacement. Impact: This step-by-step selection makes learning and troubleshooting more guided and focused, helping users understand the machine’s internal mechanics.

Hotspot Interactivity:

Feature: Once the 3D model is active, the user can air-tap hotspots to highlight key areas of the machine. Impact: Hotspots provide actionable areas and specific information points, enabling more in-depth exploration.

3D Model Manipulation:

Feature: Through a green-highlighted gesture button, users can easily zoom and rotate the model to inspect it from various angles. Impact: This freedom to explore the model in 3D from different perspectives gives users a comprehensive understanding of the machine’s structure, which is especially useful for maintenance or troubleshooting.
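Zoom and rotate manipulation of the kind described above is often driven by hand movement: the change in distance between the two hands scales the model, and horizontal hand motion rotates it about its vertical axis. In an MRTK project this behavior would usually come from the toolkit’s manipulation components rather than hand-written code. The minimal Python sketch below shows only the arithmetic; the scale limits, the rotation gain, and the `ModelTransform` class are assumptions for illustration.

```python
from dataclasses import dataclass

MIN_SCALE, MAX_SCALE = 0.25, 4.0  # assumed zoom limits
DEGREES_PER_METER = 180.0         # assumed rotation gain for horizontal hand drag


def clamp(x, lo, hi):
    return max(lo, min(hi, x))


@dataclass
class ModelTransform:
    """Hypothetical scale/rotation state of the augmented machine model."""

    scale: float = 1.0
    yaw_deg: float = 0.0  # rotation about the model's vertical axis

    def zoom(self, start_hand_gap, current_hand_gap, start_scale):
        """Scale in proportion to how far the two hands moved apart or together."""
        if start_hand_gap <= 0:
            return self.scale  # degenerate gesture; keep the current scale
        ratio = current_hand_gap / start_hand_gap
        self.scale = clamp(start_scale * ratio, MIN_SCALE, MAX_SCALE)
        return self.scale

    def rotate(self, hand_dx_m):
        """Rotate by horizontal hand displacement (meters), wrapped to [0, 360)."""
        self.yaw_deg = (self.yaw_deg + hand_dx_m * DEGREES_PER_METER) % 360.0
        return self.yaw_deg
```

Scaling relative to the scale captured when the gesture starts, rather than compounding per frame, keeps the zoom stable while the hands hold still; clamping prevents the model from shrinking out of sight or filling the whole view.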